How to build an application that scales

A primer for small dev teams

An application that ‘scales’ is one that is performant even as load increases. A well designed application can scale to extremely high concurrent users; usually there’s one weak link in the chain (typically the database) that fails to scale past a certain point, and forces a significant architecture change.

But realistically, no one reading this article will ever hit that limit. So let’s look at what it takes to set up an application that can scale to, say, 10K concurrent users.

The frontend

This is dead simple. Your frontend should scale indefinitely with no problems. If it doesn’t, you screwed up really badly. There are two scenarios with very simple architecture to review.

In scenario A, you are every web3 frontend developer that has ever existed, and you used Vercel. You probably didn’t need to use Vercel, but you’re too lazy to set up any kind of CICD pipeline. Anyway, Cloudflare will sit between your users and Vercel, caching the data and letting you scale to serve your HTML/CSS/JS to all of CT. If for some reason you scale up to serving all of the planet, you might need Vercel to additionally handle autoscaling itself for you.

In scenario B, you are too cheap to pay $20 a month for Vercel so you stick your HTML/CSS/JS in any kind of Cloud Storage solution. These are a dime a dozen and they’ll all do the trick, because again, Cloudflare is doing all the work.

The backend

This is where things need a bit more design. Although it depends from application to application, typically there are two main types of backend server.

The application server receives requests, processes them, potentially fetches data or sends requests elsewhere, and finally responds to the user.

The database server holds the state for your application. It stores state (sometimes as ‘rows’ of data) in a format that can be quickly looked up by a key value. So a web3 app might store stuff like your preferences, previous interactions, etc keyed by your wallet address.

Before we look at how to architect this, lets look at some ways NOT to architect it.

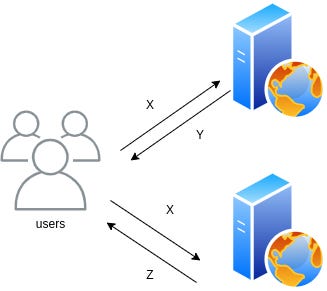

Antipattern: stateful application server

Users send requests to your app server, and they ultimately send responses back. Your app server might need to query a database, send a transaction, whatever. But once it’s done, it’s done.

If that request changes state in the server in some way (other than metrics or logs) you screwed up. If you have two application servers running, they should be completely fungible.

Any time you store state in the application server you’re reducing your ability to scale.

Some advanced use cases might use sessions for performance, those are out of scope for this article. You don’t need it.

Antipattern: singleton application server

This is really just an extreme extension of the previous problem. If you have a server, and there can only be one of those servers running at a time, YOU SCREWED UP REALLY BAD.

There’s no way to scale if you have singletons (other than vertically, with a bigger server). There’s no reliability (what happens if you have maintenance).

Generally you don’t need singleton servers. If you do, there is (almost) always a different solution, typically something that involves message queuing. If there’s not (I don’t believe you) you should have a very tiny server for exactly that singleton purpose, and no more.

A minimal backend setup

Lets get into the meat and potatoes. There are a bunch of components here, I’ll go over them independently, and then raise some additional concerns.

Users

Users can be anywhere, although it’s important to note that America and Western Europe are the most common locations. So if you have to pick regions to host services in, those should be top of mind.

Cloudflare

Once again, you see Cloudflare in the picture. There are alternatives, e.g. GCP and AWS have similar offerings. But you need something to do caching, preferably as close to the customer as possible. That’s not going to be you.

You have to have a good handle on your API surface and think carefully about what you want to cache, and for how long. Letting Cloudflare take care of some of that burden will save serious load on your servers.

Application load balancer

If you want your application to scale, you need to be able to run it on multiple servers. But how do you dispatch the requests to those servers? That’s where the ALB comes in. You can configure it to forward requests from your target URL to specific sets of servers, with various load balancing options: Round Robin, Deterministic Hashing, Utilization, Response Time (I’m not going to go into those, you can look them up).

The ALB is responsible for things like:

Keeping track of what servers are alive

Determining what servers should be sent what requests

Actually forwarding the requests

If your architecture doesn’t have a load balancer in it, you screwed up.

Autoscaling application server

If you want to be able to handle 100x the load, you need at least 100x the compute. That’s just math.

To be able to serve 100x the compute, you need to use a service that will automatically scale up the compute your service is using in response to utilization. Generally servers target 80% utilization, and if that level is reached, a new instance is spawned.

There are a lot of mechanisms to do this, and they typically fall into either the ‘scale instances’ bucket or the ‘scale containers’ bucket.

You can use GCP GCE Managed Instance Groups or AWS EC2 Autoscaling for the former, but I don’t recommend it. VMs suck.

You should prefer to containerize your application and use a container scaling option such as GCP Cloud Run or AWS Elastic Container Service. Containers are designed to be fungible and easily deployable, they’re perfect for autoscaling applications.

Database cluster

What I spec’d out here is overkill, but if you really want to prep for scaling, you should have N read-only replicated databases, and one read-write database that’s only used for mutating queries. You can start with 1 read replica, and scale up as needed.

If you’re using AWS RDS, you can set it in serverless mode, which will let you scale up and down as necessary on the fly. If you’re using Google Spanner, well, 70% of Google runs on Spanner, so I think you’ll be fine.

Other considerations

Although the things I described above are 80% of what you need to know, there are literally hundreds of things that could be added to the list. Don’t take this as being fully comprehensive; I recommend consulting someone with experience. I’ll tag on a few more items to try and round things out.

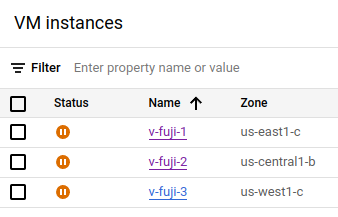

Regionalization

I mentioned this briefly before, but you generally need to pick a region (or zone) to run your services in. Make sure you do not run all your servers in the same region, cloud providers only guarantee SLOs if you shard your service across multiple regions. Every ALB has the ability to balance over multiple regions and you should take advantage of it.

Additionally, it’s just nice in terms of rollouts. If your backends are in 5 regions, you can roll out one region at a time and watch for increasing error rates.

Obviously it depends on how much compute you’re actually using, but 2-3 regions is a good amount to shoot for.

Firewalls / security

You should be extremely careful when designing the network that your services are running in. How you do this is extremely specific to the provider, e.g. AWS uses Security Groups, GCP uses Firewall Rules with Tags.

Personally I find AWS’s Security Groups much easier to reason about than the way GCP does it. You can relatively easily limit the ingress and egress of traffic by protocol/port to your servers.

If you have an architecture diagram it should be pretty clear what ingress paths each server needs. You can limit them so that specific parts of your architecture can only access other parts on specific ports. Here’s an example I’m using:

This is a rule attached to my load balancer that only accepts HTTPS ingress, and only allows egress to the relevant ECS port. The ECS service has a corresponding rule that only allows ingress from the load balancer. Tightly locked down.

You may not care to make it this locked down. But 100% make sure that your database is not directly exposed to the internet. This is not a joke. You must not do that. I don’t care that it’s inconvenient for you to log in and run SQL commands.

Logging

This is highly dependent on what service you’re running, but both GCP and AWS have centralized logging mechanisms. You should make sure you’re logging to those from your services, which typically is as easy as writing to standard output. In some cases you may need to put a bit more work into it.

But the logs that get ingested are usually searchable by tags that let you track down what service emitted them, where the code was running, what version, etc. It’s a lifesaver.

Monitoring

You’ll probably find out pretty quickly that your server needs to respond to a heartbeat check of some kind to take advantage of autoscaling. This is pretty simple generally, just add a /healthcheck path that returns OK. If you want to get fancy, some services allow you to export things like current load, which you’ll need to track yourself.

But more importantly, you should be tracking the health of your system as measured by user journeys. You probably know what API calls a user makes as they log in, browse around, send mutating requests, etc.

Unsurprisingly, once again, both GCP and AWS have standard tools for this kind of monitoring. Write probes to make sure your service is returning valid results, in an expected amount of time.

There are also ways to literally instrument your servers so that they report metrics that can be collected and aggregated across your fleet, but that’s a pretty advanced topic.

Containerizing

You probably found this out by now, but most things expect you to build an OCI (Docker) container. This is very easy to do, just suck it up and learn. I’ll even provide you an example magical incantation.

You can do it, I believe in you.

The end

I had planned to include more specifics around mechanically how to set up all these things, but it really bogs down the article. If there’s interest I might go into more details in a future post.